Perceptar — A Speculative Interface for Spatial Imagination

Technical Stack

Perceptar is an experimental interface that explores how the body, space, and interaction can form a new grammar of creation. Set in a responsive virtual environment, the system invites users to reshape themselves, manipulate objects from a distance, and traverse space beyond scale—using only gesture, gaze, and presence.

Rather than presenting space as a static backdrop, Perceptar turns it into a living, reactive medium. Users are guided by a conversational system that responds like a companion—teaching through dialogue, encouraging exploration, and eventually stepping back as users build worlds of their own.

This project is inspired by cinematic storytelling, speculative interfaces, and embodied interaction research. It asks not how we can design in space, but how space can become a language—one that remembers, responds, and evolves.

Research & Development Process

Exploring embodied interactions in virtual spatial design

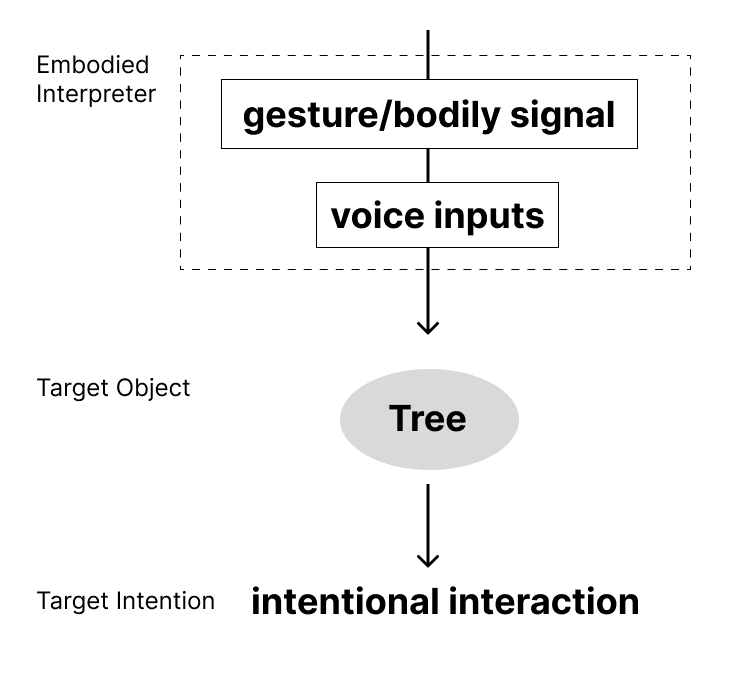

Defining natural interaction through bodily inputs, context understanding, and verbal commands

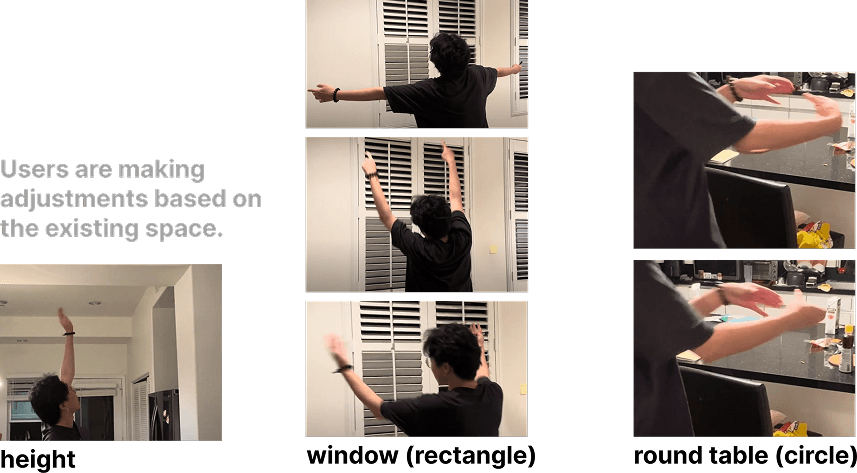

Users making spatial adjustments through intuitive body movements and gestures

Mapping function translating the user's mental model to actual spatial coordinates

Visual Design

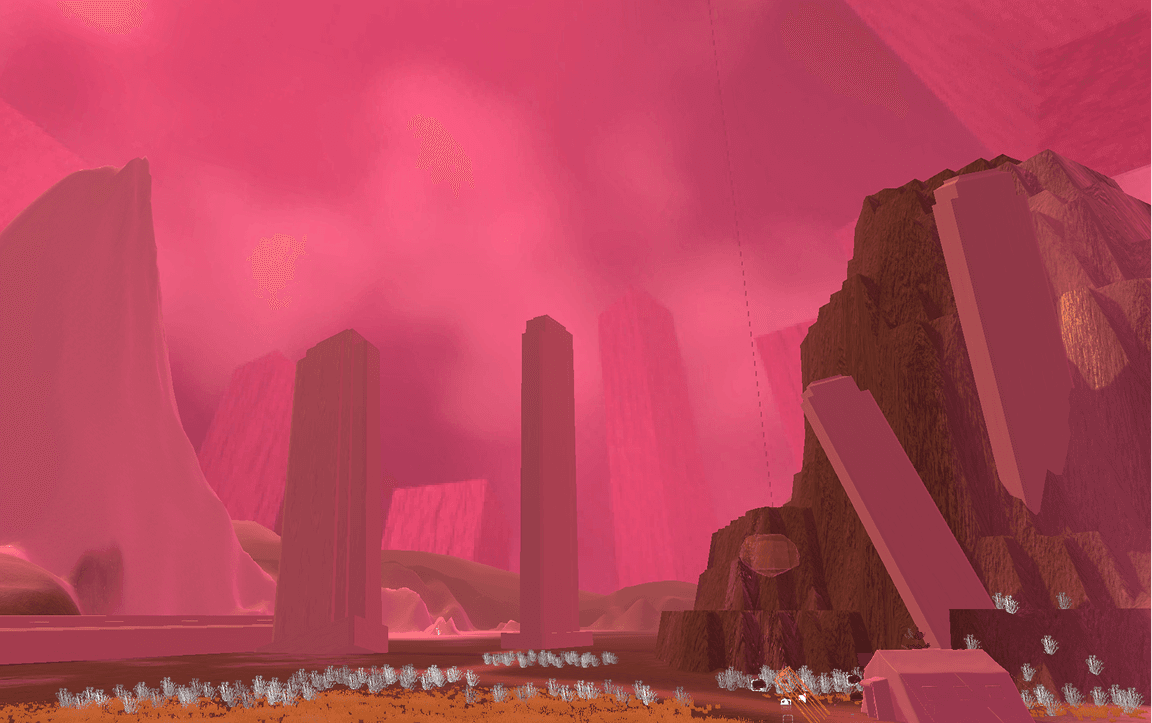

Early visual design concepts exploring the interface aesthetics

Refined visual language for the spatial interaction system

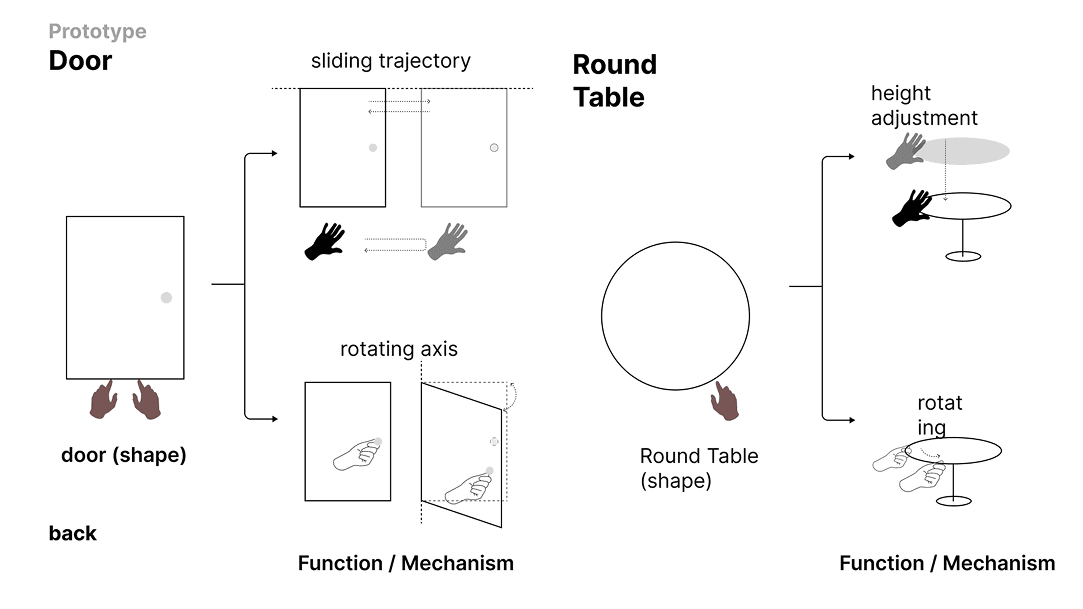

Prototype Evolution

Prototype 1: Gesture Recognition & Auto-Smoothing

The first prototype focused on understanding how users naturally draw in 3D space. We implemented an auto-smoothing algorithm that detects symmetrical patterns in a user's gesture and automatically refines the rough drawing into a clean rectangle or circle.

This system recognizes the intent behind the gesture rather than requiring precise movements, making spatial creation more intuitive and forgiving.
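One way to picture this kind of snapping is the minimal Python sketch below. It is not the prototype's implementation, which runs in Unity and is described as symmetry-based. As a stand-in, the sketch assumes the stroke has already been projected onto a 2D plane and uses a simpler test: if every point sits at roughly the same distance from the stroke's centroid, the stroke is treated as a circle, otherwise it is snapped to its axis-aligned bounding rectangle. The function name `snap_stroke` and the `circle_tolerance` value are hypothetical.

```python
import math
from statistics import mean, pstdev


def snap_stroke(points, circle_tolerance=0.07):
    """Snap a rough closed 2D stroke to a circle or an axis-aligned rectangle.

    Assumption: `points` is a list of (x, y) samples already projected onto
    the drawing plane. `circle_tolerance` is an illustrative threshold on the
    relative spread of the point-to-centroid distances.
    """
    if len(points) < 3:
        raise ValueError("need at least three points")

    # Centroid of the sampled stroke.
    cx = mean(p[0] for p in points)
    cy = mean(p[1] for p in points)

    # Distance of every sample from the centroid.
    radii = [math.hypot(x - cx, y - cy) for x, y in points]
    avg_radius = mean(radii)
    if avg_radius == 0:
        raise ValueError("degenerate stroke")

    # A circle has near-constant radius; a rectangle does not.
    if pstdev(radii) / avg_radius < circle_tolerance:
        return {"shape": "circle", "center": (cx, cy), "radius": avg_radius}

    # Otherwise fall back to the bounding rectangle of the stroke.
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return {"shape": "rectangle", "min": (min(xs), min(ys)), "max": (max(xs), max(ys))}
```

A wobbly loop with a few percent of radial noise comes back as a circle, while a shaky four-sided stroke comes back as the rectangle that encloses it. A fuller version would also need to handle rotated rectangles and open strokes.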

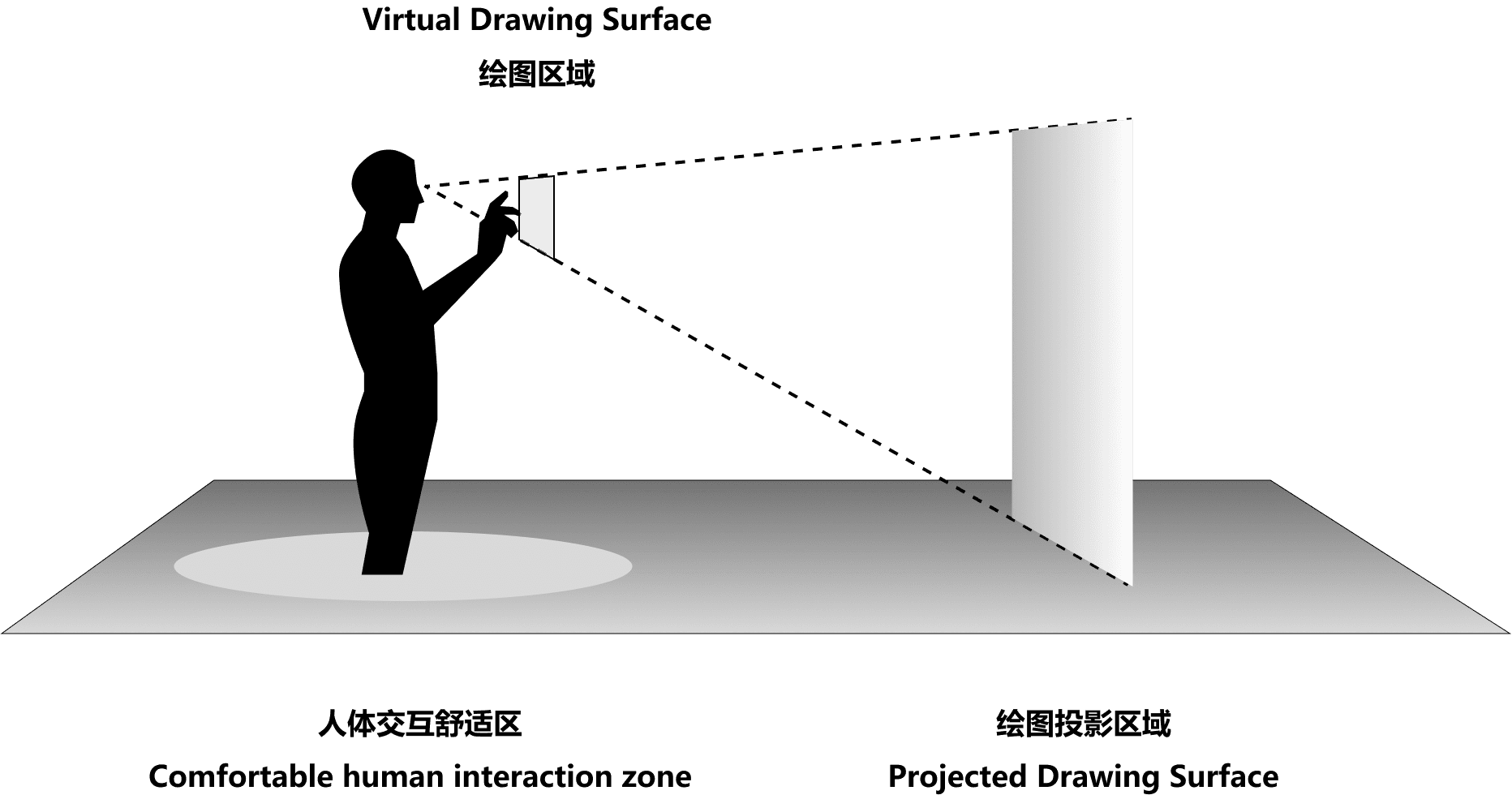

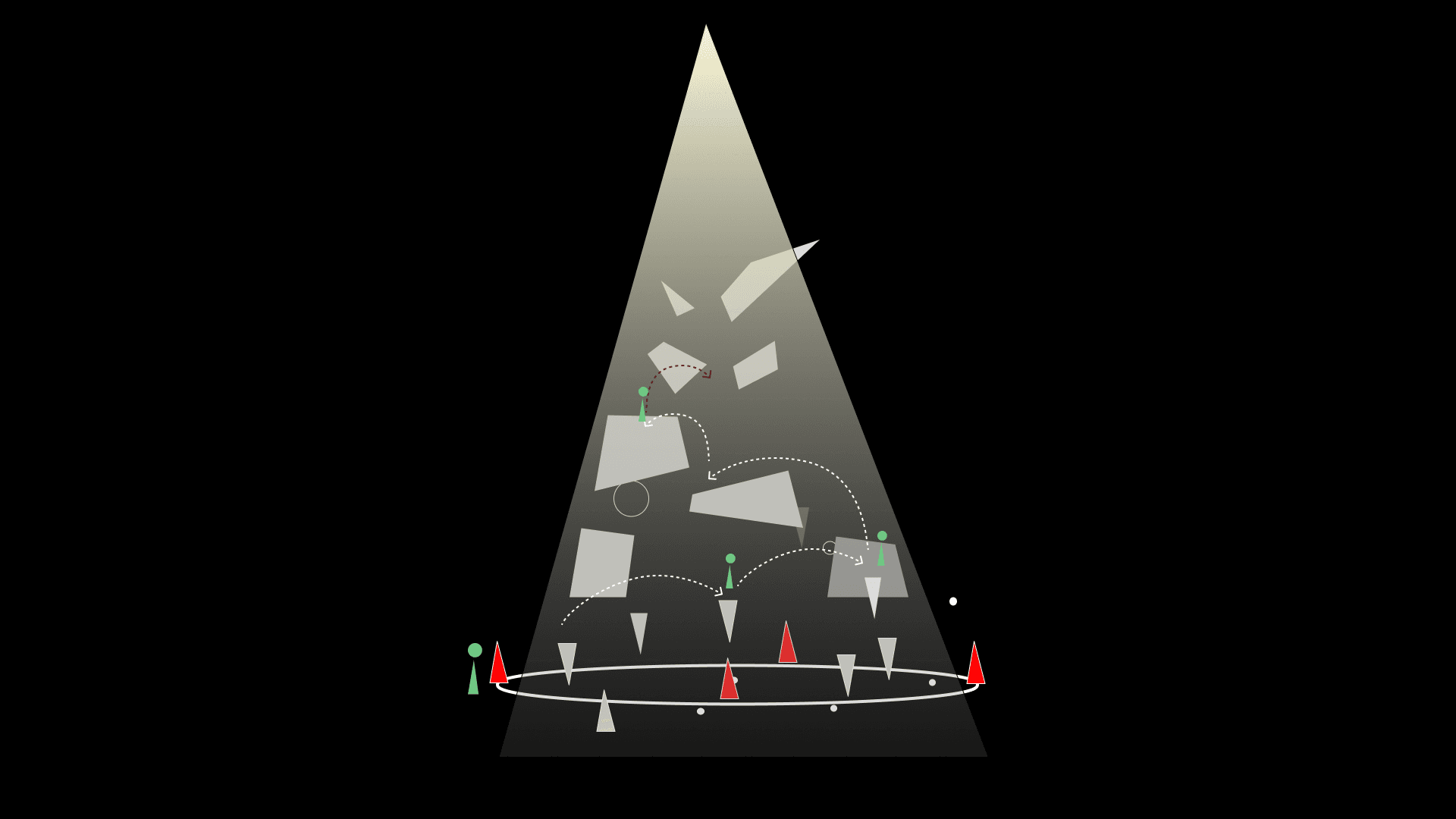

Prototype 2: Mental-to-Physical Space Mapping

Our research revealed that users often draw based on their mental model of space rather than physical reality. Prototype 2 introduced a mapping function that translates gestures from the user's point of view into the corresponding position in virtual space.

This allows users to draw walls and structures as they imagine them, with the system handling the spatial translation—bridging the gap between mental conception and virtual creation.
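The simplest form such a mapping could take is a change of reference frame, sketched below in Python. This is an illustration under stated assumptions, not the project's actual mapping function: the gesture point is assumed to be expressed relative to the user's head as (right, up, forward), the world is y-up, and a positive yaw turns the forward axis toward world +x. The name `local_to_world` and its parameters are hypothetical.

```python
import math


def local_to_world(local_point, head_position, head_yaw_degrees):
    """Map a point from the user's head-relative frame into world space.

    Assumptions: local_point is (right, up, forward) in metres, the world is
    y-up, and a positive yaw rotates the forward axis toward world +x.
    Head pitch and roll are ignored in this sketch.
    """
    x, y, z = local_point
    yaw = math.radians(head_yaw_degrees)
    c, s = math.cos(yaw), math.sin(yaw)

    # Rotate the horizontal components about the vertical axis.
    world_dx = x * c + z * s
    world_dz = -x * s + z * c

    # Translate by the head position; height is unchanged by yaw.
    hx, hy, hz = head_position
    return (hx + world_dx, hy + y, hz + world_dz)
```

With this convention, a point drawn two metres straight ahead by a user who has turned 90 degrees lands two metres along world +x from the head, rather than along world +z where a naive world-space reading would place it.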

Prototype 3: Perceptar - Full Functional Experience

The culmination of our research, Perceptar creates a complete spatial imagination environment where users can experience the full range of embodied interactions. Key features include:

- Distance control for manipulating objects regardless of proximity

- Object transformation controls, including the ability to scale and "explode" large structures

- Free-form drawing to create "keystones"—platforms that users can traverse

- Integrated spatial audio that responds to user creations and movements

This prototype demonstrates how spatial design can become an intuitive language when the interface adapts to human perception rather than forcing users to adapt to technology.
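The "explode" control listed above can be read as an exploded view, where each part of a structure moves outward from the structure's center. The Python sketch below illustrates that reading only. The source does not specify how the effect is computed, and the function name `explode` and its `factor` parameter are hypothetical.

```python
def explode(part_centers, factor):
    """Push each part away from the structure's centroid.

    Assumption: `part_centers` is a list of (x, y, z) positions, one per part.
    A factor of 1.0 leaves the structure unchanged; larger values spread the
    parts apart, smaller values pull them together.
    """
    count = len(part_centers)
    if count == 0:
        return []

    # Centroid of all part positions.
    centroid = tuple(sum(p[i] for p in part_centers) / count for i in range(3))

    # Scale each part's offset from the centroid by the factor.
    return [
        tuple(c + (p[i] - c) * factor for i, c in enumerate(centroid))
        for p in part_centers
    ]
```

Because the offsets are scaled about the centroid, the structure stays centered in place while it opens up, which keeps it within reach of the same gesture that triggered the effect.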

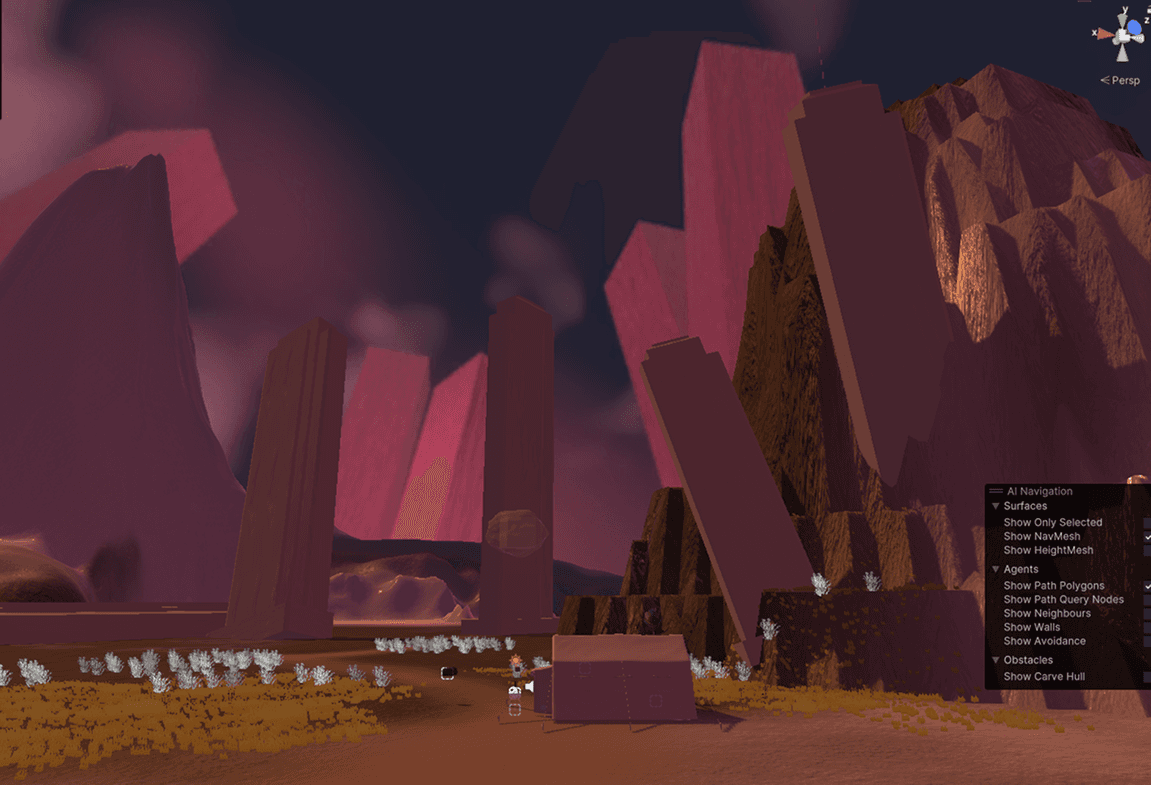

System Architecture

The Perceptar system integrates multiple components to create a seamless spatial design experience:

- Gesture recognition system with symmetry detection

- Mental-to-physical space mapping algorithm

- Spatial audio engine that responds to user creations

- Conversational AI guide that adapts to user behavior

This architecture allows for a fluid experience where users can focus on creation rather than learning complex controls.

🔍 Core Features

- Embodied interaction: gesture-based scaling, drawing, teleportation

- Narrative AI system: reactive voice-driven guide

- Multi-scale environment: from intimate space to cosmic architecture

- Free creation mode: no goals, no rules—just your spatial grammar

🛠 Built with

Unity · VR Interaction Toolkit · Custom Dialogue Engine · Spatial Sound Design

Research Focus

Defining 'Natural Interaction' in Virtual Spatial Design

What are the design and research avenues for creating more 'natural interactions' in virtual spatial design?

This involves exploring how users can engage with virtual environments in ways that feel intuitive and satisfying, emphasizing the natural integration of human gestures, cognition, and technology.

Applying 'Natural Interaction' to a Spatial Design Tool

How can we integrate natural interactions into a spatial design tool to enable users to build worlds with satisfaction?

The tool should enhance the user's creative experience by providing seamless, intuitive interaction methods that feel aligned with how humans naturally interact with physical space.

Challenges and Opportunities

Subjectivity:

Designing for subjectivity means acknowledging that users may have different approaches to creating virtual spaces. A flexible tool must accommodate various design philosophies and personal styles.

Cultural Understanding:

Different cultural interpretations of gestures and interactions may require adaptable systems that can account for these variations in user behavior.

Previous Projects

- Gesture Recognition

- Building Game

- AR Place

Current Progress

- Insights: gesture + function

- Prototype: VR → Draw rectangle/circle

Future Directions for Discussion

Adaptive Systems - A system can learn.

How can a system learn user intentions? The next step is to design adaptive systems that evolve based on user behavior, helping users refine their interactions over time.

Verbal Cues/Prompts - Embodiment

A further step is to introduce verbal input to refine the AI algorithms and improve context understanding, so that users can interact with the system through both gestures and voice commands.

Research Thesis

For a deeper understanding of the theoretical framework and research methodology behind Perceptar, you can download my complete thesis paper, which explores the intersection of embodied cognition, spatial design, and virtual reality interfaces.

Download Thesis Paper (PDF)